In the first of a three-part series of parallel interviews with scientists and engineers participating in Future Labs, Automation & Technology Summit West, Avinash Gill, Ph.D., Senior Principal Scientific Manager, gRED, Genentech, shares the cutting-edge approaches his team takes to drive the discovery of new medicines.

It was a pleasure to interview Avinash! He is a biologist focused on advancing a revolution in research output, his depth of knowledge in leveraging lab automation is impressive, and the questions he hopes more people will discuss are thought-provoking. If you’re interested in cutting-edge life science approaches, I encourage you to register for this summit and connect in person.

Increasing research output to develop novel therapeutics

Q1: What is your role, company, and expertise?

As a Senior Principal Scientific Manager within gRED (Genentech Research and Early Development), I lead a group that generates target antigens for small- and large-molecule drug discovery projects, using a wide range of techniques in molecular biology, biochemistry, biophysics, automation, and informatics. My group works very closely together to enable a high-throughput automated process for generating antigens that feed directly into drug discovery projects.

Q2: What types of technologies do you focus on to advance research and discovery?

In terms of technologies, we use a lot of molecular biology, cell culture, biochemistry for protein purification and characterization, and lab automation to miniaturize and automate much of our lab work. We begin with molecular biology to generate DNA constructs that are fed directly into different cell culture expression platforms. We use insect, mammalian, and bacterial cell culture to express the proteins, and we are now exploring cell-free protein expression to increase throughput and miniaturize processes even further. At the other end, we use large-scale cell culture to generate enough biomass to produce proteins that express at very low levels; some target proteins can be very, very poor expressors. At this stage, we focus on mammalian cell culture, where you have sterility concerns and have to manage transfection or transduction conditions, sensitivity, titers, and protein toxicity, all of which affect the cells. Baculovirus platforms are somewhat more robust than mammalian cells, which have stringent culture requirements, so that remains a semi-automated process.

We balance speed and throughput against cost, because cost obviously becomes a big factor. On the molecular biology side, I would say we take a hybrid approach, splitting work between CROs (contract research organizations), to leverage their expertise and technology, and our own in-house automation. On the protein expression side, we often work on projects that require innovative approaches, because some proteins are very poor producers. For instance, we might change the promoter or the vector backbone in which a protein is expressed, add inducible expression, or tweak the sensitivity of the promoter itself to boost expression. Across all these R&D technologies, there are many adjustments we have to make along the journey, from receiving the construct in digital format to handing off purified protein to drug discovery project leads.

Q3: Do you see a relationship between biological research, lab automation, and sustainability goals?

That’s a great question. I strongly see that relationship developing, because the pharma industry is currently bringing artificial intelligence and machine learning into its fold, right? With that shift, we have been tasked with generating more data to support foundation models, since machine learning models need large volumes of high-quality data to help with drug discovery. As we move in that direction, there is no way we can generate those volumes of data without automation. That puts research volume, automation, and sustainability in direct connection as we scale up our research.

For experiments that tell us how to develop, design, and create a novel therapeutic, especially for challenging targets, scientists need to adopt automation in their regular workflows, and that is where sustainability becomes an important factor. As we do more experiments, we use more energy, more consumables, more space for equipment, more lab reagents, and so forth. Miniaturizing experiments helps conserve some resources, because you're no longer always running hundreds or thousands of liters of culture to get proteins. But there is a trade-off. You have to invest heavily in highly specialized automation: large instruments that can perform the task in a miniaturized format day in and day out, reproducibly and precisely. At that scale, the use of tips, labware, and reagents increases. You shift from a few large containers holding large volumes of material to many more small containers. This is when sustainability questions come in. Can we reuse certain components? How do research quality and experimental reproducibility ultimately tie into our sustainability goals?

NK: You’d hope that, relative to the amount of data that you're getting, the inputs are actually lower? In other words, the research work becomes more sustainable.

Exactly, that’s the goal. That’s what we want to achieve by shifting the paradigm and how the work is done. We can also use AI to make more intelligent predictions about which experiments are likely to succeed, so we still have as many shots on goal, but with enough information to give us some predictability, so we don’t take shots we don’t need to. Based on the trends we have seen, and with AI helping us model, we can take new approaches. It's not quite a full alanine-scanning mutagenesis of a protein; it’s more like making informed changes to a protein to make it more soluble, to make it a valuable target we can pursue, and so on.

Q4: How do you leverage lab automation in life science research?

Sometimes we work with engineers to tackle tough challenges that are not necessarily the focus of automation in other fields. Take expressing antibodies versus other proteins, for instance. Antibodies are a large therapeutic modality that has been very successful in treating disease for many years.

Let’s say you run a large discovery campaign to find antibodies in a haystack of candidates, selecting the ones to move forward based on developability, affinity, target, and similar criteria. We generally work with mammalian cell expression, but also with other expression platforms. By nature, antibodies are very robust molecules that cells secrete as soluble proteins, and we try to reproduce that in a lab environment. This type of molecule is secreted by CHO cells, and you get lots of it. You then purify it, characterize it, and so on. It follows the same paradigm each time, right?

When you're working with other proteins, though, the paradigm is completely different. Antibodies are a subclass of proteins, but the protein targets we work with can be membrane proteins, intracellular proteins, or secreted proteins, and each type requires you to treat the cells differently. If you're harvesting membrane proteins, you're taking the membrane and working with lipids to stabilize the proteins in a mimic of the cell environment. If you're working with intracellular proteins, you culture and harvest the cells, discard the culture supernatant, and lyse the cells to work with the cytosolic contents. If you're working with secreted proteins, you throw away the cells and work with the supernatant. Adding purification or solubilization tags to a protein can also change how it behaves. Recombinant expression isn't guaranteed to succeed, because proteins can be toxic to the cells producing them. They can regulate key cellular mechanisms, so the cell itself might not want to produce much of that protein; it has checks and balances that control how much protein is made. Overall, you end up doing a lot of work to get it right. Proteins pose far bigger challenges than antibodies when you’re designing automation. You can build automated processes that generate thousands, tens of thousands, or even millions of antibodies in a single year, but scaling up to generate even thousands of other proteins a year becomes a very complex system.

The point is that, depending on the biological problem being tackled, the automation solution needs to be creative. It's not one-size-fits-all. We try to standardize as much as possible, but at the same time we must consider the unique challenges presented by each therapeutic modality. Take RNA molecules as an example: sterility and RNases in the environment become an issue. You can’t do cell lysis in the same lab where you work with RNA, because lysis would release RNases into the lab. RNA production requires automation equipment that can handle the most delicate steps of the process.

Q5: What makes pursuing this technology worthwhile, and how do you gauge value?

We don't always know from the outset that we will automate something. Scientists come up with very creative solutions, and almost all of those processes start off as manual workflows. Science is constantly evolving; what wasn't relevant three or four years ago can suddenly become very relevant. The disease targets that count as “low-hanging fruit” change, and different classes of proteins bring different sets of challenges. From a process standpoint, when it comes to generating reproducible experimental data, the process we know is going to be used day in and day out is the one with the highest value to automate.

It’s wise to step back, take a high-level survey, and ask what the minimum valuable product is that we can generate, or which steps we can automate in a modular fashion. Let’s say a particular step is immutable: processes X, Y, and Z all use it. Regardless of the source and regardless of the final outcome, we are always going to use these few steps of the workflow. Then the conversation becomes: let's make that step as robust as possible. Can we change it from a manual process to an automated one, or even a semi-automated one in some cases, and get the maximum value out of removing that bottleneck? Because if we don’t automate it, that bottleneck is always going to exist, no matter what. The science can change at the beginning or at the end, but we can automate this stage. We have to take a hard look at our processes, see where different workflows overlap, and then combine those steps as much as possible.

It’s an effort to take advantage not only of automation but also of informatics. Having automation run your workflow is all well and good, but these days it's also about integrating with LIMS (laboratory information management systems): pulling information from them and, crucially, driving raw data back into them. If you’re experimenting with automation, you’re generally generating a large volume of data. If everything comes down to a scientist manually pulling data off an instrument and putting it onto a server, it kind of defeats the purpose. Automation development and informatics development have to happen in sync.
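To make the idea of driving raw data straight into a LIMS more concrete, here is a minimal Python sketch under stated assumptions. It assumes a hypothetical plate-reader export with `Well` and `Value` columns, and the record fields (`plate_barcode`, `instrument_id`, `captured_at`) are illustrative names, not any particular LIMS schema. The upload step is left out because it depends entirely on the LIMS vendor's API.

```python
import csv
import io
from datetime import datetime, timezone


def to_lims_records(raw_csv: str, plate_barcode: str, instrument_id: str) -> list[dict]:
    """Turn a plate-reader CSV export into per-well records ready for a LIMS.

    Assumes a hypothetical export format with "Well" and "Value" columns;
    the output field names are illustrative, not a real LIMS schema.
    """
    # One timestamp per export, so every well from the same run shares it.
    captured_at = datetime.now(timezone.utc).isoformat()
    records = []
    for row in csv.DictReader(io.StringIO(raw_csv)):
        records.append(
            {
                "plate_barcode": plate_barcode,  # links data to the physical plate
                "well": row["Well"].strip(),
                "value": float(row["Value"]),
                "instrument_id": instrument_id,  # provenance for auditing
                "captured_at": captured_at,
            }
        )
    return records


if __name__ == "__main__":
    export = "Well,Value\nA1,0.52\nA2,1.10\n"
    for record in to_lims_records(export, "PLATE-0001", "reader-01"):
        print(record)
```

In practice, the returned list would be serialized (for example with `json.dumps`) and sent through whatever client the LIMS provides; the point of the sketch is simply that nobody copies numbers off the instrument by hand.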

My approach, which I think will increase our success, is to look at both informatics and automated solutions at a high level and identify key modular steps that can be used over and over again.

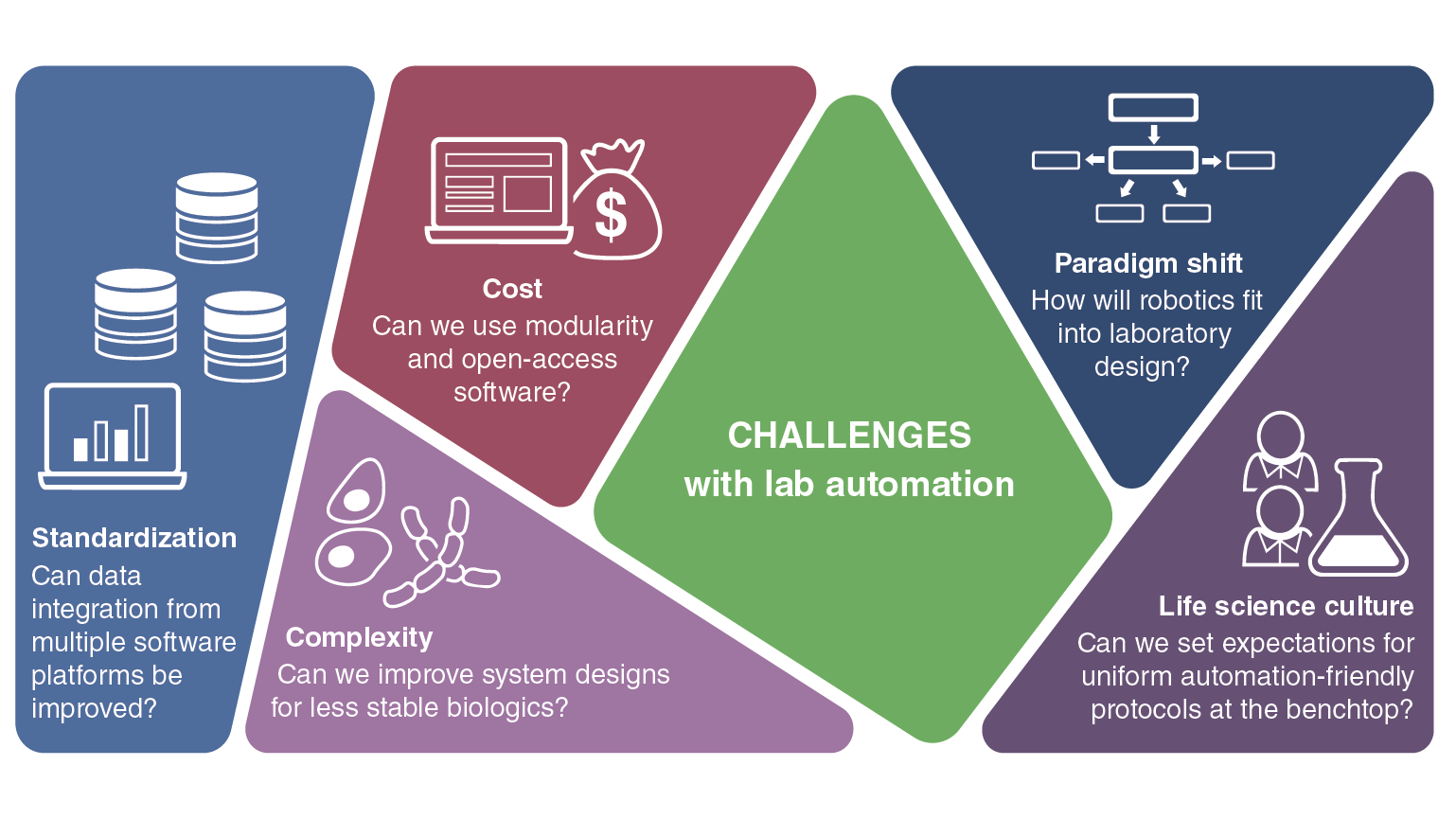

Q6: What are common barriers to integrating lab automation systems?

Cost is a huge one, as I mentioned. Lack of standardization is another, and standards could help here, much as standard service level agreements and standardized assay plate formats already do. Right now, each automation vendor has its own software. Liquid handlers from Hamilton, Tecan, Agilent, or any other manufacturer each run their own software, and you need people who specialize in writing scripts in that particular software to use them with robotics. A scientist cannot adopt an automated workflow unless an automation engineer sits in the middle to interpret and set up the workflow for them, so it isn't easy to roll out an automated workflow on demand.

There is also the challenge of standardization between scientists. Say there is a scientist in lab one and another in lab two, both working on molecular biology workflows. Both are doing PCR, transforming bacteria, growing the cells, and then doing minipreps to send samples for sequencing. These are standard workflows with many commonalities, and all of it can be automated. But if the two scientists use different labware or conditions in their experiments, automation becomes a challenge. Who will compromise? This is a simple example, and many are more complex, but the point is that standardizing processes between labs in the same organization can be a challenge, especially for something like adopting barcodes. Scientists give their molecules and experiments names they can relate to. Once a barcode is assigned, the sample becomes machine-readable but no longer human-readable. That’s the challenge, because machines and databases don't understand human language. Hopefully that is a barrier AI can break: large language models might help bridge the gap between scientists and machines and drive discovery even faster.
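One low-tech way to keep barcodes and human-readable names connected is a registry that maps each machine-readable barcode back to the name a scientist chose. The sketch below is a hypothetical, in-memory Python illustration; the `GR` prefix and eight-digit format are assumptions, and a real deployment would presumably keep this mapping in the LIMS rather than in a Python object.

```python
import itertools
from dataclasses import dataclass, field


@dataclass
class SampleRegistry:
    """Hypothetical registry linking machine-readable barcodes to human names."""

    prefix: str = "GR"  # assumed barcode prefix, purely illustrative
    _counter: itertools.count = field(
        default_factory=lambda: itertools.count(1), init=False, repr=False
    )
    _by_barcode: dict[str, str] = field(default_factory=dict, init=False)
    _by_name: dict[str, str] = field(default_factory=dict, init=False)

    def register(self, human_name: str) -> str:
        """Return the barcode for a name, minting a new one if needed."""
        if human_name in self._by_name:
            return self._by_name[human_name]  # same name, same barcode
        barcode = f"{self.prefix}{next(self._counter):08d}"
        self._by_barcode[barcode] = human_name
        self._by_name[human_name] = barcode
        return barcode

    def name_for(self, barcode: str) -> str:
        """Translate a scanned barcode back into the scientist's name."""
        return self._by_barcode[barcode]


if __name__ == "__main__":
    registry = SampleRegistry()
    code = registry.register("KinaseX_full_length_His6")
    print(code, "->", registry.name_for(code))
```

Scanners and databases work with the barcode, while anyone reviewing a result can still see the name; the design choice is simply never to let the two drift apart. `KinaseX_full_length_His6` is a made-up sample name.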

Q7: What skillsets help biologists thrive in automation-enabled labs?

Developing an automation mindset is not trivial. By this, I mean being able to envision what an end-to-end automated process could look like, and what changes or tweaks the manual process might need to fit into an automated workflow. The automated version can sometimes be slower than what scientists can do by hand. That's OK, because automation gives scientists back their time and lets them work on something of higher value, like reading a paper or doing data analysis. Basically, it frees them from the mundane task of moving liquids from one container to another over and over. It also makes experiments more reproducible by eliminating the fatigue those tasks can induce. I'm not saying fatigue-related errors always happen, but they're possible, right?

I think having that automation mindset would be great, even for scientists who work with automation engineers. Some coding skills, such as a working knowledge of Python or basic algorithms, can help scientists make small changes on liquid handlers, because we’re not always working on a big, complicated work cell. Sometimes we're just using a simple liquid handler with a 96-well head to move liquids into a 96-well plate, and people should be able to do that themselves without feeling intimidated. Being open to those ideas, thinking about miniaturization, and simplifying steps rather than taking the complicated route are all basic skills that help in any setting. These skillsets are becoming an inflection point in the education of biologists.
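As an example of the kind of small change a scientist with some Python could make, here is a sketch that generates a one-to-one, 96-well plate-to-plate transfer worklist as CSV. The column names and labware labels are hypothetical; each vendor's software (Hamilton, Tecan, Agilent, and so on) expects its own import format, so the header would need to be adapted to whatever your instrument accepts.

```python
import csv
import io
from string import ascii_uppercase

ROWS = ascii_uppercase[:8]  # plate rows A-H
COLS = range(1, 13)  # plate columns 1-12


def wells_96(column_major: bool = True) -> list[str]:
    """List all 96 well IDs, column by column (A1, B1, ...) by default."""
    if column_major:
        return [f"{r}{c}" for c in COLS for r in ROWS]
    return [f"{r}{c}" for r in ROWS for c in COLS]


def build_worklist(volume_ul: float, source: str = "SourcePlate",
                   dest: str = "DestPlate") -> str:
    """Build a CSV worklist copying every well of one plate into the same
    well of another. Header names are hypothetical placeholders."""
    if volume_ul <= 0:
        raise ValueError("transfer volume must be positive")
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    writer.writerow(["SourceLabware", "SourceWell", "DestLabware", "DestWell", "Volume_uL"])
    for well in wells_96():
        writer.writerow([source, well, dest, well, volume_ul])
    return buf.getvalue()


if __name__ == "__main__":
    print(build_worklist(50).splitlines()[:3])
```

Changing the volume or switching to row-major order is then a one-argument edit, which is the kind of tweak that need not wait for an automation engineer.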

Q8: How are artificial intelligence algorithms helping in the lab?

Being able to analyze large data sets and design experiments is helpful. These days, we can ask any of the large language models to dig deep into the literature and come up with a standardized protocol for a particular experiment. The groups my team works with are heavily into structural biology, and they use protein structure prediction models such as Google DeepMind's AlphaFold. A model-derived structure can sometimes differ from an experimentally determined one, but by and large these models help researchers leapfrog ahead in designing the best experiment to ask specific questions. More generally, AI's ability to plot large data sets as graphs and figures, and to synthesize and summarize information in a more digestible format, has made it a very powerful tool.

Q9: What is unique about the Future Labs, Automation and Technology (FLAT) summit?

This is the first time I'm attending a Future Labs, Automation & Technology Summit specifically. However, this event speaks to an emerging need in the industry: connecting the dots on how AI, automation, and informatics can help us tackle biotech and biopharmaceutical research going forward. More and more people are thinking about this challenge. Compared with the automotive industry or other technology sectors that have adopted AI very quickly and built robotics to leverage it, the pharma industry still has some catching up to do. I think a summit like this brings those minds together and enables the dialogue and education that can help design our future labs.

Q10: Can you envision a future with an artificial intelligence scientist or lab technician supporting projects?

I'm really excited about this concept, actually. Even though we can now design technology systems (together with the automation vendors we work with) that run pretty much 24/7, there are still many areas of research, especially basic research, where experiments are scheduled around people’s work schedules. Often, scientists find a logical place to stop in the evening so they can pick up a kid or catch a shuttle. After all, work-life balance matters. Sometimes people get sick. People can’t work continuously and should take breaks, and all of this interrupts the continuity of experiments. This is where a physical AI agent, such as an autonomous robot, could serve as a partner to scientists, letting them focus more on the high-value work of experiment design. In that activity, AI agents could offer suggestions: software could query a database and extract relevant information, such as aggregating experiments done across the organization, so that scientists can make more informed decisions and designs. AI could support better hypothesis testing, and then a physical agent could help execute the experiment. Imagine a physical AI robot that moves between instruments in the lab, taking plates out of one and loading them into another, or retrieving temperature-sensitive reagents from refrigeration exactly when an experiment needs them.

Q11: What do you wish more people would talk about in lab automation?

How will robotics impact us? I attended a conference a few days ago where someone predicted that there will be more robots than humans on Earth by 2040. It was an eye-opening remark. The real question to grapple with is how we adopt robots in the pharma industry. How does the scientist tackle change management? How do we accommodate robots in our midst? How will workplaces change in the biotech and pharmaceutical industries? How do we get all of this right?

Interested in learning more perspectives?

Connect in person at the Future Labs Summit at the Hadley Hotel in San Diego, California, and read our other Q&As on lab automation and sustainability!

Part two features Ilja Kuesters, Ph.D., of Generate:Biomedicines, whose group builds “agent augmented” labs that use software assistants to help design and plan experiments, schedule equipment, and capture auditable data. Part three features an interview on Lab Automation and Integration Platforms with Yousef Baioumy, Automation Engineer at Adaptive Biotechnologies™, and Jesse Mayer, Ph.D., Senior Field Application Scientist at Automata.

Use the discount code: PARTNER15